
The "screenshot-to-code" project is an open-source tool that generates HTML/Tailwind CSS code from screenshots, making it easier to replicate competitor websites. It utilizes the gpt-4-vision API to recognize images and generate corresponding HTML code. The project consists of frontend and backend components, with the backend using gpt-4-vision for code generation. The post provides instructions for running and deploying the project and includes additional references to similar functionality in other projects.

screenshot-to-code

Recently, while browsing GitHub, I came across an impressive open-source project called "screenshot-to-code". It generates HTML/Tailwind CSS code from screenshots, which makes it much easier to replicate a competitor's website. The project is available at https://github.com/abi/screenshot-to-code.

Technical Principle

The main technology used is the

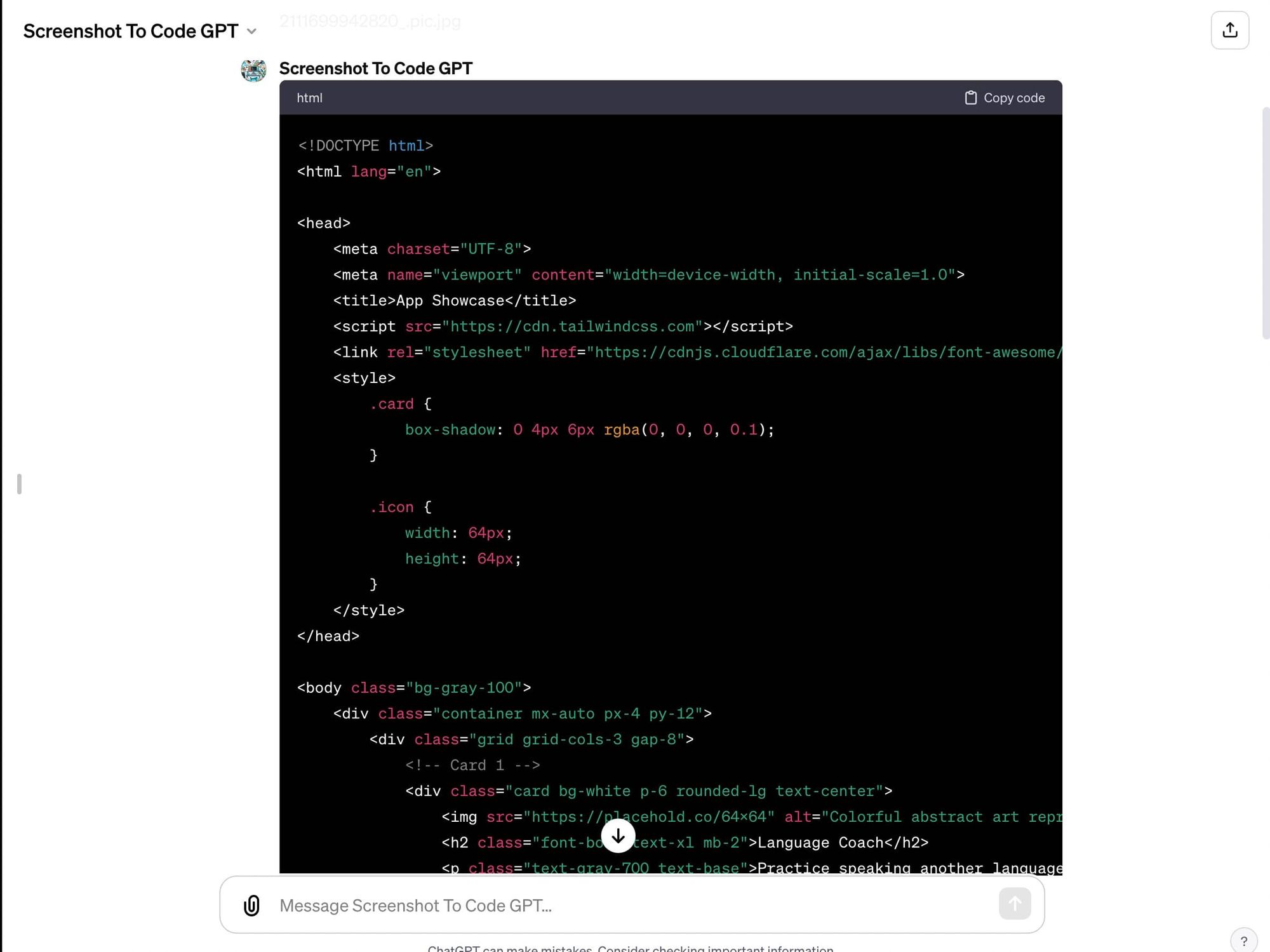

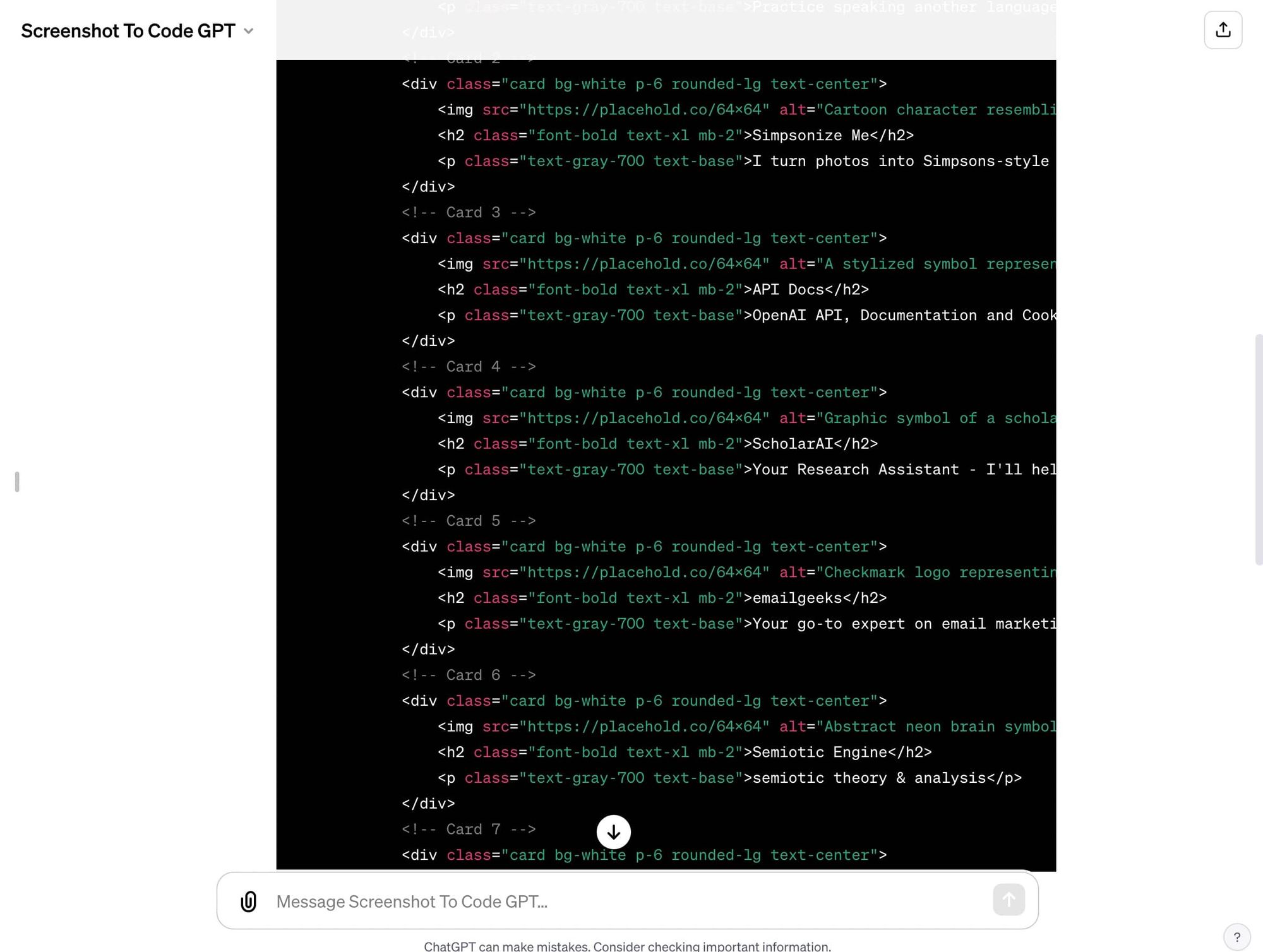

gpt-4-vision-preview API to recognize images and, combined with prompts, directly generate the corresponding HTML code.The project is divided into frontend and backend. The most crucial part of the frontend is converting your screenshot into a base64 string and then sending it to the backend interface via a websocket.
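The frontend step can be sketched roughly as follows. The real frontend runs in the browser, so this Python version only mirrors the idea: encode the image bytes as a base64 data URL, then wrap that string in a JSON message to send over the WebSocket. The function names and the `image` field name are hypothetical and are not taken from the project's code.

```python
import base64
import json
import mimetypes


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a data URL: data:<mime>;base64,<payload>."""
    payload = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{payload}"


def file_to_data_url(path: str) -> str:
    """Read an image file and encode it, guessing the MIME type from the extension."""
    mime_type, _ = mimetypes.guess_type(path)
    with open(path, "rb") as f:
        return to_data_url(f.read(), mime_type or "image/png")


def build_ws_message(data_url: str) -> str:
    """Wrap the data URL in a JSON text frame (the "image" key is a hypothetical choice)."""
    return json.dumps({"image": data_url})


if __name__ == "__main__":
    # Any bytes work for this demo; a real screenshot would be read with file_to_data_url.
    print(build_ws_message(to_data_url(b"hello")))
    # {"image": "data:image/png;base64,aGVsbG8="}
```

A data URL is convenient here because it carries the MIME type alongside the payload, so the backend can forward the string to the vision API without decoding it first.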

Upon receiving the base64 image, the backend passes it to gpt-4-vision to generate the code. For more on vision-related usage, see the OpenAI documentation: https://platform.openai.com/docs/guides/vision.
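On the backend, the request to the vision model pairs a system prompt with the user's image. Below is a minimal sketch of building that request body, following the message format described in the OpenAI vision guide (an `image_url` content part whose `url` can be a base64 data URL). `SYSTEM_PROMPT` here is a short hypothetical placeholder, not the project's actual prompt; the user instruction text and the `max_tokens` default are also assumptions.

```python
import json

# Hypothetical placeholder; the project's real SYSTEM_PROMPT is longer and more detailed.
SYSTEM_PROMPT = (
    "You are an expert Tailwind developer. Build a single-page app "
    "using Tailwind, HTML and JS that matches the screenshot exactly."
)


def build_vision_request(data_url: str,
                         model: str = "gpt-4-vision-preview",
                         max_tokens: int = 4096) -> dict:
    """Return a Chat Completions request body that combines the prompt and the image."""
    return {
        "model": model,
        "max_tokens": max_tokens,  # assumed value; it must leave room for a full HTML page
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {
                "role": "user",
                "content": [
                    # The screenshot is passed as the data URL the frontend sent.
                    {"type": "image_url", "image_url": {"url": data_url, "detail": "high"}},
                    {"type": "text", "text": "Generate code for a web page that looks exactly like this."},
                ],
            },
        ],
    }


if __name__ == "__main__":
    body = build_vision_request("data:image/png;base64,aGVsbG8=")
    print(json.dumps(body, indent=2))
```

With the official `openai` Python package, a body like this could be sent with `client.chat.completions.create(**body)`; streaming the response back over the same WebSocket is a separate concern not shown here.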

Let's examine the content of `SYSTEM_PROMPT`. This is the core part: it tells GPT to act as a professional Tailwind developer who builds single-page apps with Tailwind, HTML, and JS, and it adds constraints that stop GPT from producing meaningless content.

Running and Deployment

Run `git clone https://github.com/abi/screenshot-to-code.git` in your terminal to clone the project. Then change into the screenshot-to-code directory, and from there into the backend and frontend directories in turn to run each part.

For the backend, you need to provide your `OPENAI_API_KEY` and make sure the key has access to gpt-4-vision.

For the frontend, once it is running, open http://localhost:5173 to use the app. If you prefer to run the backend on a different port, update `VITE_WS_BACKEND_URL` in `frontend/.env.local`.
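Both settings live in plain environment-variable files. The sketch below writes them for a local run. It assumes the backend reads `OPENAI_API_KEY` from `backend/.env` and that `VITE_WS_BACKEND_URL` takes a `ws://` URL; the host `127.0.0.1`, the default port `7001`, and the helper name `write_env_files` are assumptions for illustration, so check the project's README for the actual values.

```python
import tempfile
from pathlib import Path


def write_env_files(repo_root: Path, api_key: str, backend_port: int = 7001) -> None:
    """Write backend/.env and frontend/.env.local for a local run.

    Assumed layout: OPENAI_API_KEY in backend/.env, and the backend's
    WebSocket URL in VITE_WS_BACKEND_URL inside frontend/.env.local.
    """
    backend_dir = repo_root / "backend"
    frontend_dir = repo_root / "frontend"
    backend_dir.mkdir(parents=True, exist_ok=True)
    frontend_dir.mkdir(parents=True, exist_ok=True)
    (backend_dir / ".env").write_text(f"OPENAI_API_KEY={api_key}\n")
    # Per the post, this only matters if the backend runs on a non-default port.
    (frontend_dir / ".env.local").write_text(
        f"VITE_WS_BACKEND_URL=ws://127.0.0.1:{backend_port}\n"
    )


if __name__ == "__main__":
    # Demo in a throwaway directory instead of a real checkout.
    with tempfile.TemporaryDirectory() as tmp:
        write_env_files(Path(tmp), "sk-your-key", backend_port=7002)
        print((Path(tmp) / "frontend" / ".env.local").read_text(), end="")
        # VITE_WS_BACKEND_URL=ws://127.0.0.1:7002
```

Against a real clone you would pass the repository path, for example `write_env_files(Path("screenshot-to-code"), "sk-...")`, then restart both servers so they pick up the new values.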

If you run into problems, check the project's GitHub issues for related discussions, open a new issue, or contact the author on Twitter.

Additional References

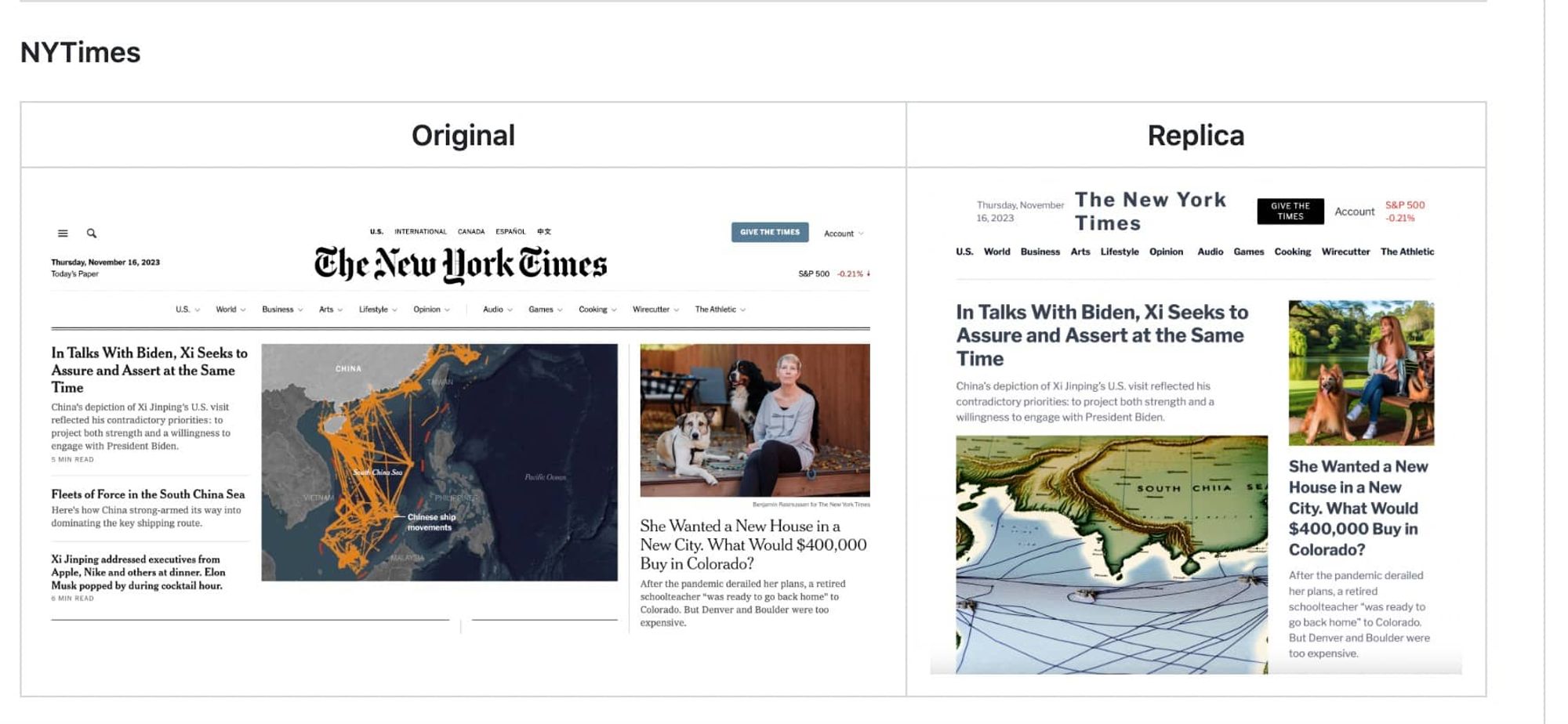

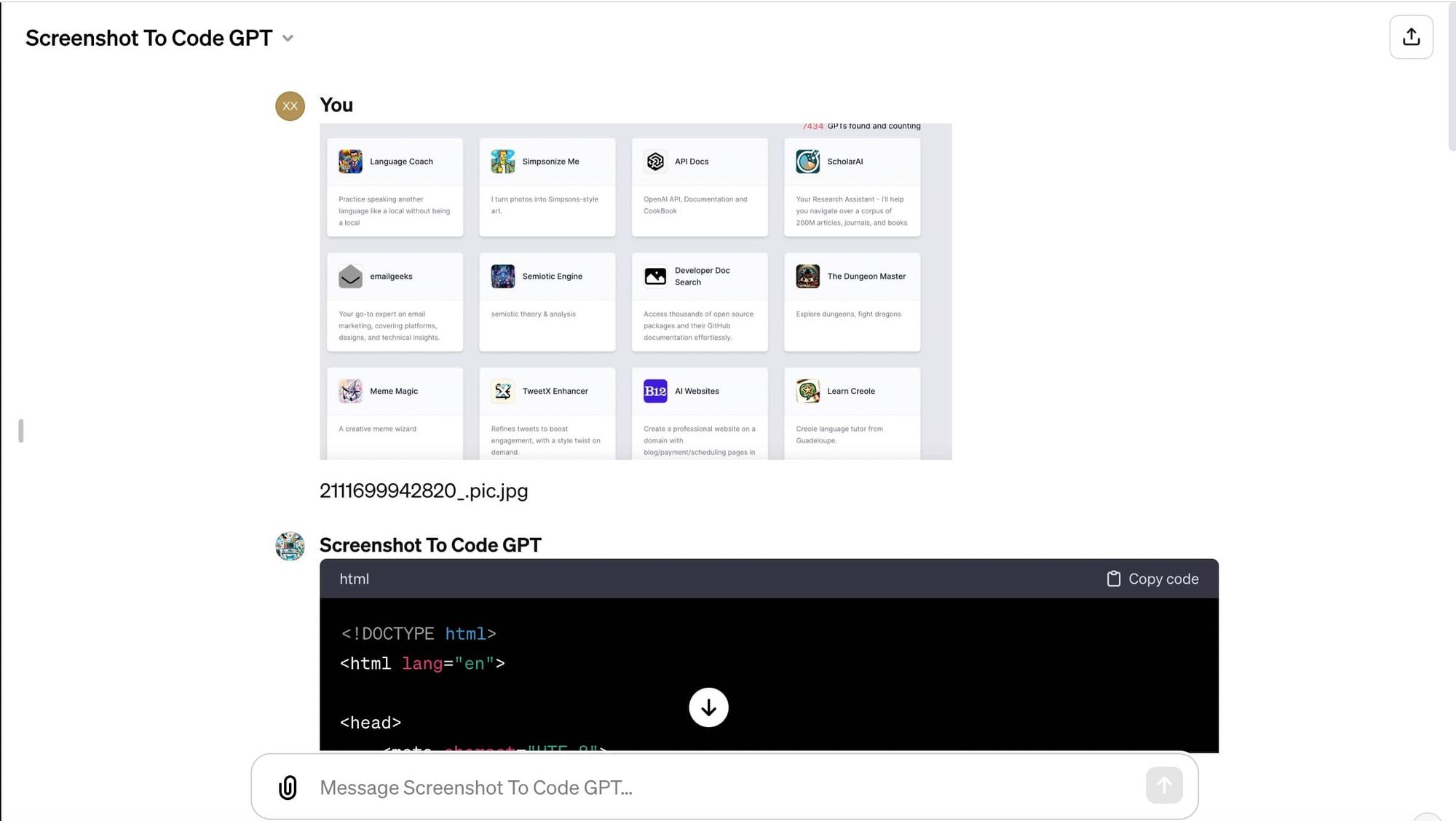

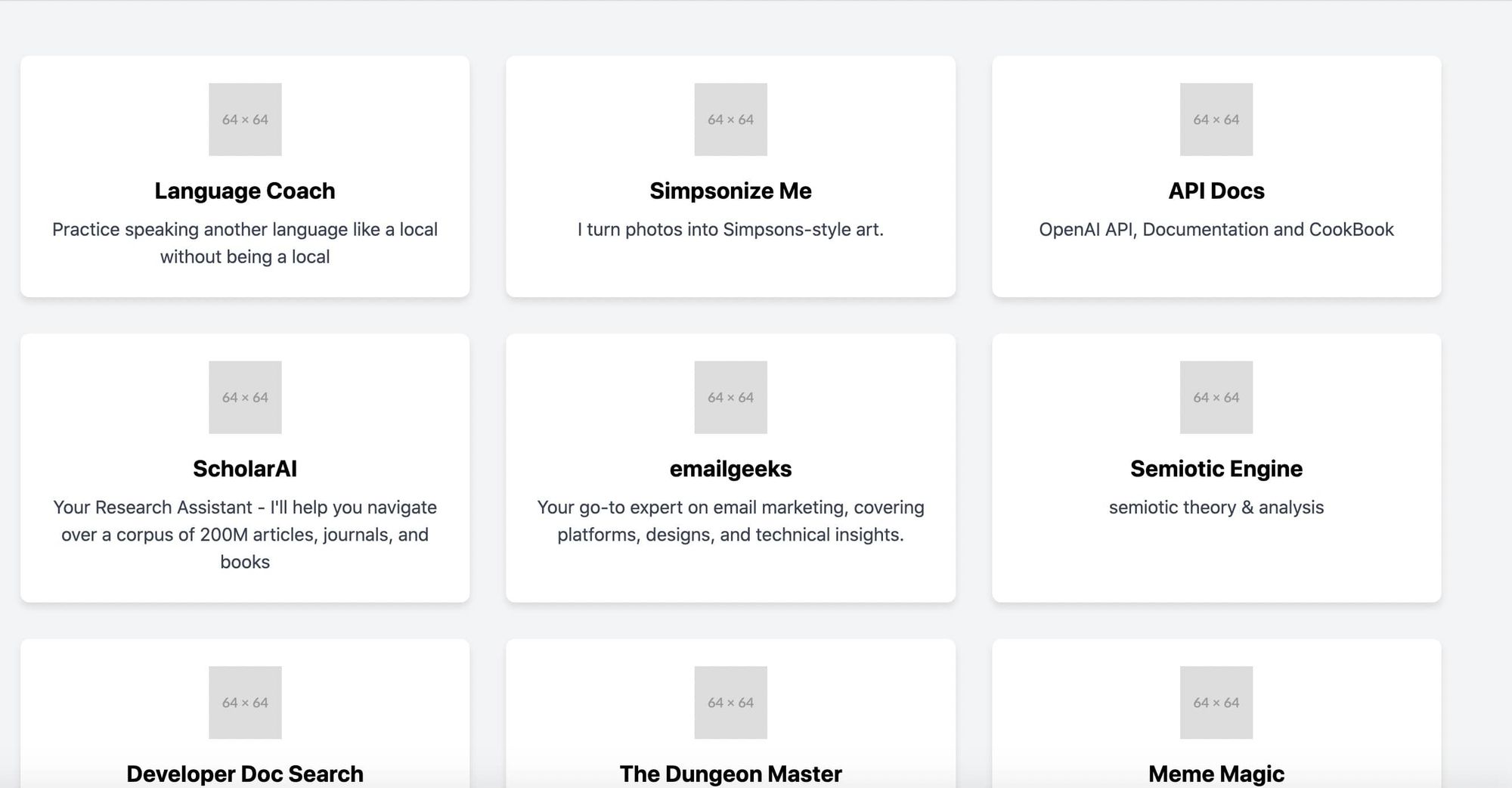

Apart from this project, I also found similar functionality in a screenshot-to-code GPT on the GPT Store, available at https://chat.openai.com/g/g-hz8Pw1quF.

I uploaded a website screenshot to test it. You only need a ChatGPT Plus membership to use it; no `OPENAI_API_KEY` is required. You can judge the results for yourself.

The generated single-page HTML, when previewed in Chrome, looks very realistic.

Feel free to try it out!

- Author: AwesomeAI

- URL: https://www.awesomeai.online/article/screen2code-generate-html-tailwind-css-code-from-screenshots-with-ai

- Copyright: Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA. Please credit the source!